MallePad

Investigation of a Multipoint Malleable Touch Surface

In 2004, at the beginning of my doctoral research, I worked on the MallePad, a side project undertaken in equal collaboration with Phillip Jeffrey, Nelson Siu, and Ian Stavness. The MallePad was an investigation of a multipoint malleable touch interface, in contrast to traditional (rigid) touchpads. Our research was generally motivated by the following three concepts:

- There are strong opportunities for richer physical input devices;

- Pressure sensitivity is an under-explored channel for data input; and

- The affordances of a compliant input device are relatively unknown.

Our work utilized the Malleable Surface Interface, which was created by Florian Vogt, Timothy Chen, and Reynald Hoskinson under the supervision of Dr. Sidney Fels of the Human Communication Technologies (HCT) Research Laboratory in the Department of Electrical and Computer Engineering at the University of British Columbia (UBC).

Our specific research took many paths: We generated a variety of use cases for the MallePad; we conducted several first-principles user studies with the device; and we designed an assortment of gestures that users might employ on the MallePad. In order to highlight our work while avoiding information overload, I present here only our proposed gestures.

Proposed Gestures

Drawing on our use cases and the results of our two user studies, we conceived of a set of six gestures for which we believed the MallePad would prove a more effective input device than a rigid touchpad: single-point deformation, multipoint deformation, single-point translation, pinch, grasp, and rotate. I provide here abbreviated descriptions of each gesture.

Single-Point Deformation

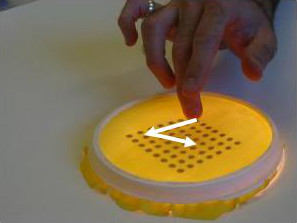

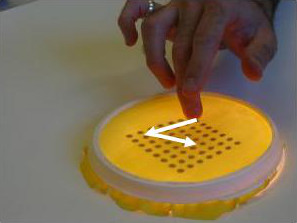

Figure 1: Single-point deformation gesture.

Position Control: A single-point downward deformation of the surface maps to zooming in. Once zoomed in, the user can zoom out by releasing pressure. This provides an intuitive mapping of the gesture to the change in zoom level. The main issue with this position-control zooming task, however, is that it is impossible to zoom out beyond the starting zoom level. Switching the mapping of downward force to toggle between zoom-in and zoom-out may be undesirable, as it likely would be confusing to the user.

Rate Control: The gesture is the same as for position control, but the downward depression is mapped to the rate of continuous zoom-in. As the user applies more pressure, the viewport zooms faster; as pressure is released, the zoom slows down. Rate control shares the problem of unidirectional motion: with this mapping there is no way to zoom out, because “no pressure” corresponds to “stop zooming” and the view simply remains at the current zoom level.
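The difference between the two mappings can be illustrated with a minimal sketch. It is not the MallePad implementation: the function names, the normalized pressure range of 0 to 1, and the `max_gain` and `max_rate` parameters are all assumptions made for illustration.

```python
def clamp01(value):
    """Clamp a (hypothetical) normalized pressure reading to the range [0, 1]."""
    return min(max(value, 0.0), 1.0)


def position_control_zoom(pressure, base_zoom=1.0, max_gain=4.0):
    """Position control: the zoom level is a direct function of current pressure.

    Releasing pressure returns the view to base_zoom, so the view can never
    zoom out past its starting level.
    """
    p = clamp01(pressure)
    return base_zoom * (1.0 + (max_gain - 1.0) * p)


def rate_control_zoom(zoom, pressure, dt, max_rate=2.0):
    """Rate control: pressure sets the zoom speed, integrated over a time step dt.

    Zero pressure means zero speed, so the zoom level holds where it is
    and can only ever increase.
    """
    p = clamp01(pressure)
    return zoom * (1.0 + max_rate * p * dt)
```

In both functions the output never falls below the starting zoom level, which is the unidirectional limitation described above.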

Continuous Multipoint Deformation

Figure 2: Continuous multipoint deformation gesture.

For a continuous multipoint deformation gesture (Figure 2), the user makes downward deformations into the MallePad surface. There are no set points where the user is pushing; rather, the physical depressions in the MallePad surface map to deformations of a virtual surface. Applications include continuous mesh deformation for terrain building and object sculpting. The virtual surface could also map to any set of spatially arranged parameters that are continuously modulated. In a sound controller, for example, a spatial organization of sound attributes would be affected by deformations in the MallePad surface.

This is a position-control gesture, as deformations in the MallePad map directly to deformations in a virtual surface. The scale of the mapping is dependent on the application.
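As a rough sketch of such a position-control mapping, assume the pad reports a grid of depression depths and the virtual surface is a height grid of the same shape. The grid representation, the function name, and the `scale` parameter are hypothetical and stand in for whatever the application actually uses.

```python
def deform_surface(rest_heights, pad_depths, scale=1.0):
    """Return a virtual height grid lowered by the scaled pad depressions.

    rest_heights: 2D list of virtual surface heights with no input applied.
    pad_depths:   2D list of measured depression depths, same shape (assumed).
    scale:        application-dependent gain from pad depth to virtual depth.
    """
    if len(rest_heights) != len(pad_depths):
        raise ValueError("grids must have the same number of rows")
    deformed = []
    for height_row, depth_row in zip(rest_heights, pad_depths):
        if len(height_row) != len(depth_row):
            raise ValueError("grid rows must have the same length")
        deformed.append([h - scale * d for h, d in zip(height_row, depth_row)])
    return deformed
```

Because the result depends only on the current depths, releasing the pad restores the resting surface, which is what makes this a position-control mapping rather than a rate-control one.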

Single-Point Lateral Translation

Figure 3: Single-point lateral translation gesture.

Single-point lateral translation (Figure 3) specifies lateral movement in the X/Y coordinate system. The user simply moves her finger across the top of the MallePad in North, South, East, West, or any diagonal direction.

Position Control: The single point of depression is slid across the MallePad surface to specify a lateral movement. This is the same gesture used to perform cursor pointing with traditional touchpads. The advantage of the MallePad is that the lateral motions can be combined with downward depression to integrate two tasks, such as panning and zooming. However, the depth of downward pressure is difficult to maintain as lateral movement is performed, so task integration may not make sense.

Rate Control: The single point of depression is pushed in a lateral direction on the MallePad surface to specify translation movement in that direction. This gesture is similar to that of a laptop track point. The advantage of the MallePad is that the user’s force makes a deformation in the surface and provides tactile feedback about the applied force; this is beneficial for making fine adjustments to the movement rate.
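The two lateral mappings can be contrasted in a short sketch. All names, the `gain` values, and the `dead_zone` threshold are assumptions for illustration; in particular, the dead zone is an added convenience to ignore very small pushes and is not something described in our work.

```python
import math


def position_control_pan(view_xy, finger_prev, finger_now, gain=1.0):
    """Position control: the view moves by the finger's displacement times a gain."""
    dx = finger_now[0] - finger_prev[0]
    dy = finger_now[1] - finger_prev[1]
    return (view_xy[0] + gain * dx, view_xy[1] + gain * dy)


def rate_control_pan(view_xy, push_xy, dt, gain=100.0, dead_zone=0.05):
    """Rate control: the lateral push sets a velocity, integrated over dt.

    push_xy is the (hypothetical) lateral deflection of the surface from the
    initial contact point, normalized so that 1.0 is a full push.
    """
    push_x, push_y = push_xy
    if math.hypot(push_x, push_y) < dead_zone:
        return view_xy  # push too small to count as intentional
    return (view_xy[0] + gain * push_x * dt, view_xy[1] + gain * push_y * dt)
```

With position control the view stops as soon as the finger stops; with rate control the view keeps moving for as long as the push is held.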

Pinch

Figure 4: Pinch gesture.

The pinch gesture (Figure 4) allows a user to pinch the MallePad’s surface between the thumb and forefinger. This gesture maps to the interaction of grasping a virtual object or creating a bulge in a virtual surface. The user can actually engage and grasp the surface in this gesture, which provides tactile resistance in the pinching motion.

This could also be used to specify the size of a region, such as the active area of a window scrollbar or timeline. The pinch specifies a size, while lateral movement changes the location of the affected object or widget. Similarly, specifying a region and location on a timeline (such as the entry and exit points on the timeline of a video editor) could be accomplished through the pinch gesture.
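The timeline example might be sketched as follows, assuming the pad reports the horizontal position of the thumb and forefinger contacts. The function name, its parameters, and the linear pad-to-timeline scaling are all hypothetical.

```python
def pinch_to_region(thumb_x, finger_x, pad_width, timeline_length):
    """Map two pinch contact positions on the pad to (entry, exit) on a timeline.

    The distance between the contacts sets the region's size, and sliding
    both contacts together moves the region along the timeline.
    """
    if pad_width <= 0:
        raise ValueError("pad_width must be positive")
    left, right = sorted((thumb_x, finger_x))
    scale = timeline_length / pad_width
    return (left * scale, right * scale)


# Usage: a pinch between x = 10 and x = 30 on a 100-unit-wide pad,
# mapped onto a 200-second timeline.
entry_point, exit_point = pinch_to_region(30.0, 10.0, 100.0, 200.0)
```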

Grasp

Figure 5: Grasp gesture.

Grasping (Figure 5) is similar to pinching (Figure 4), but utilizes multiple fingers. A grasping gesture is most useful for pulling or bulging of a virtual surface. This could be a useful gesture when selecting and manipulating objects in a 3D environment.

Rotate

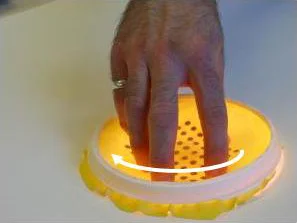

Figure 6: Rotate gesture.

The rotate gesture (Figure 6) allows direct rotation of an object or view. It is accomplished by placing one’s hand on the MallePad in a grasping manner, then pushing down and rotating one’s hand in either a clockwise or counter-clockwise direction. Because of the direct interaction, the hand’s rotation could be mapped directly to the angle of rotation. However, due to the limitations of wrist rotation, the gesture would be more effective as a means of controlling the rate of rotation of the virtual object.
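A rate-control mapping for rotation could look something like the sketch below. The function name, the `gain` value, and the 45-degree usable twist range are illustrative assumptions, not measured properties of the wrist or the device.

```python
def rate_control_rotation(object_angle_deg, wrist_twist_deg, dt,
                          gain=2.0, max_twist_deg=45.0):
    """Rate control: the wrist twist sets an angular velocity, integrated over dt.

    The twist is clamped to an assumed comfortable range, so a small wrist
    movement held over time can still turn the object through a full circle.
    """
    twist = max(-max_twist_deg, min(max_twist_deg, wrist_twist_deg))
    angular_velocity = gain * twist  # degrees per second
    return (object_angle_deg + angular_velocity * dt) % 360.0
```

Holding a constant twist keeps the object spinning, which sidesteps the limited range of the wrist that makes a direct angle mapping awkward.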

One issue with the rotation gesture is that it specifies rotation about only a single axis. This does not present a tremendous advantage over mouse input for rotation, since an axis of rotation has to be explicitly selected.